Tech Juice 2504: What is 'artificial' in Artificial Intelligence and why is the process of decision making not clearly explainable?

Question: What is artificial in Artificial Intelligence and why is the process of decision making not clearly explainable?

Motivation: Is AI really intelligence? What is the technology that has enabled this revolution? Do we know how the decisions are made?

'Artificial' in AI

In the context of artificial intelligence (AI), the term "artificial" refers to the fact that the intelligence exhibited by machines is not natural or biological, but is instead created or simulated by humans using algorithms, models, and computational systems.

To break it down:

- Artificial: This implies that the intelligence is man-made, not a product of nature or evolution. In AI, the goal is to create machines or systems that can perform tasks that would typically require human-like intelligence, such as learning, problem-solving, decision-making, understanding language, and recognizing patterns.


- Intelligence: This refers to the ability to think, reason, understand, and make decisions. In AI, it involves mimicking aspects of human cognition such as perception, learning, memory, and problem-solving.

In essence, "artificial" signifies that the intelligence is generated through technological processes, rather than being a natural or organic phenomenon. AI systems are engineered to simulate certain aspects of human intelligence using data, computational models, and algorithms.

Core technologies that have made the AI revolution possible

The main invention that has made modern AI possible is the artificial neural network, particularly the development of deep learning algorithms. While there have been many foundational contributions in the field of AI, the invention and evolution of neural networks have been key to making AI systems capable of solving complex problems like image recognition, natural language processing, and autonomous decision-making.

Here's how neural networks have played a critical role in making AI possible:

Artificial Neural Networks (ANNs)

- Artificial Neural Networks (ANNs) are computational models inspired by the structure of the human brain, composed of layers of interconnected "neurons." Each neuron processes information and passes it to the next layer, with weights and biases applied to determine the importance of the information. The ability to adjust these weights during training allows the network to learn patterns from data.

- While the concept of artificial neurons dates back to the 1940s and 1950s (e.g., the McCulloch-Pitts neuron of 1943 and Frank Rosenblatt's perceptron of 1958), the major progress since then has come from training deeper, more complex networks.
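To make "adjusting weights during training" concrete, here is a minimal sketch of the classic perceptron learning rule in plain Python. The function names, the learning rate of 1, the epoch count, and the toy AND dataset are illustrative assumptions rather than anything from a particular library.

```python
def step(z):
    """Threshold activation: output 1 if the weighted sum is non-negative, else 0."""
    return 1 if z >= 0 else 0


def predict(weights, bias, x):
    """Weighted sum of the inputs plus the bias, passed through the threshold."""
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_perceptron(data, n_inputs, lr=1, epochs=20):
    """Perceptron learning rule: on each mistake, shift the weights toward the target."""
    weights = [0] * n_inputs
    bias = 0
    for _ in range(epochs):
        for x, target in data:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Toy data (hypothetical): the logical AND of two binary inputs.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_DATA, n_inputs=2)
print([predict(weights, bias, x) for x, _ in AND_DATA])  # → [0, 0, 0, 1]
```

Integer weights and a learning rate of 1 keep the arithmetic exact, so the decision boundary does not depend on floating-point rounding.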

Deep Learning (Deep Neural Networks)

- Deep Learning refers to the use of deep neural networks, which are networks with many hidden layers of neurons. These deep networks allow AI systems to automatically learn features from raw data, such as images or audio, without requiring manual feature extraction or explicit programming.


- The breakthrough came once very deep networks could be trained on large datasets with high computational power. Algorithms such as back-propagation (for adjusting weights based on errors), running on hardware such as Graphics Processing Units (GPUs) for fast parallel computation, allowed these networks to be trained effectively.
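Computationally, "many hidden layers" means repeating one simple operation: multiply the input by a weight matrix, add a bias, apply a non-linear activation, and feed the result to the next layer. The sketch below assumes ReLU activations, arbitrary layer sizes, and random (untrained) weights purely for illustration; a real network would learn its weights from data.

```python
import random


def relu(v):
    """Element-wise ReLU activation: max(0, x)."""
    return [max(0.0, x) for x in v]


def dense(x, weights, biases):
    """One fully connected layer: out[j] = sum_i weights[j][i] * x[i] + biases[j]."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def init_layer(n_in, n_out, rng):
    """Random weights in [-1, 1] and zero biases (a simple, assumed initialization)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(x, layers):
    """Pass the input through every layer: ReLU on hidden layers, none on the output."""
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        if i < len(layers) - 1:
            x = relu(x)
    return x


rng = random.Random(0)
sizes = [4, 8, 8, 8, 2]  # input, three hidden layers, output (hypothetical)
layers = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
output = forward([0.5, -1.2, 3.0, 0.7], layers)
print(len(output))  # → 2
```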

Key Innovations for Neural Networks and Deep Learning

- Back-propagation: This algorithm, popularized for neural networks in the 1980s, is key to training them. It lets the network learn by using the chain rule to work out how much each connection weight contributed to the error between the output and the expected result, and then adjusting each weight to reduce that error.

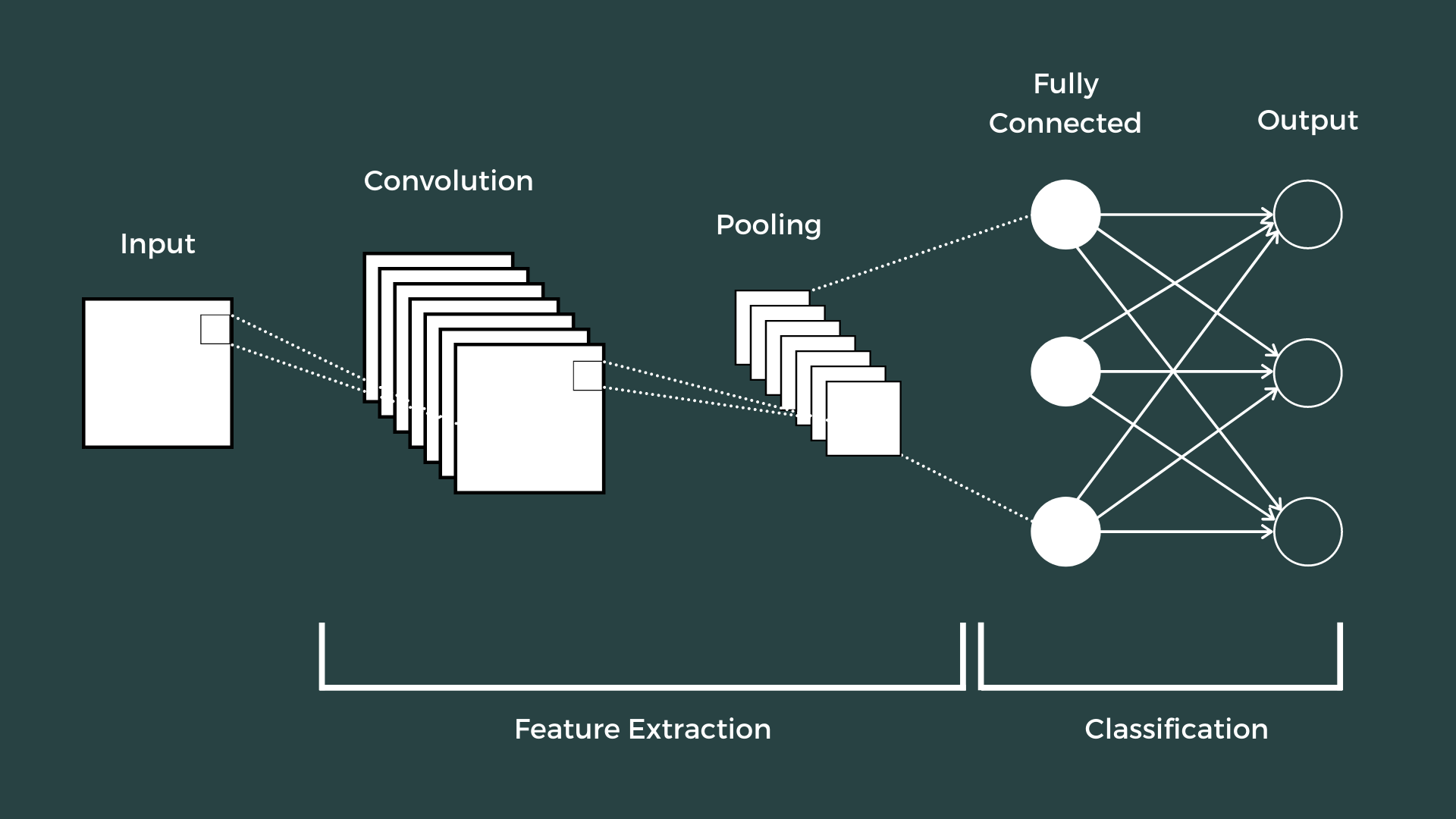

- Convolutional Neural Networks (CNNs): Introduced for tasks like image recognition, CNNs are specialized neural networks that slide small learned filters across their input, which lets them automatically detect and learn spatial hierarchies of features in visual data. They underpin many advances in computer vision.

- Recurrent Neural Networks (RNNs): Used for sequence-based tasks such as language translation or speech recognition, RNNs process data one step at a time and carry a hidden state from step to step, so information from earlier inputs can persist as a form of "memory".
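Back-propagation can be seen on the smallest possible network: one input, one sigmoid hidden unit, and one linear output, trained with squared error. The network shape, starting values, learning rate, and single training example below are assumptions chosen for illustration; frameworks apply the same chain-rule bookkeeping automatically across millions of weights.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Tiny network: x -> one sigmoid hidden unit -> linear output."""
    z = params["w1"] * x + params["b1"]
    h = sigmoid(z)
    y_hat = params["w2"] * h + params["b2"]
    return z, h, y_hat


def loss(params, x, y):
    """Squared error, halved so that its derivative is simply (y_hat - y)."""
    return 0.5 * (forward(params, x)[2] - y) ** 2


def backprop(params, x, y):
    """Apply the chain rule from the output back to every parameter."""
    z, h, y_hat = forward(params, x)
    d_out = y_hat - y              # dL/dy_hat
    d_h = d_out * params["w2"]     # dL/dh
    d_z = d_h * h * (1.0 - h)      # dL/dz, using sigmoid'(z) = h * (1 - h)
    return {"w2": d_out * h, "b2": d_out, "w1": d_z * x, "b1": d_z}


def train_step(params, x, y, lr=0.5):
    """One gradient-descent step: move each parameter against its gradient."""
    grads = backprop(params, x, y)
    return {k: params[k] - lr * grads[k] for k in params}


params = {"w1": 0.3, "b1": -0.1, "w2": 0.8, "b2": 0.0}  # arbitrary starting values
x, y = 1.5, 2.0                                          # one hypothetical example
before = loss(params, x, y)
for _ in range(50):
    params = train_step(params, x, y)
print(before, loss(params, x, y))  # the loss should shrink
```

A quick way to trust such hand-written gradients is to compare them against finite differences of `loss`, which is how the gradients above can be checked.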

Big Data and Computational Power

- Big Data: The availability of vast amounts of data, from images to text to sensor readings, is crucial for training deep learning models. AI systems require large, high-quality datasets to learn and generalize effectively.

- GPU Acceleration: The development of GPUs, initially designed for graphics rendering, has been a game-changer for training deep learning models. GPUs can perform many calculations in parallel, making them much faster for AI model training compared to traditional CPUs.

Other Key Contributions

- Support Vector Machines (SVMs): Through the 1990s and 2000s, SVMs were another influential approach to machine learning, particularly for classification tasks, although they were eventually overshadowed by the rise of deep learning.

- Reinforcement Learning: While not as widely applied as deep learning, reinforcement learning has enabled significant advancements, especially in areas like game playing and robotics (e.g., AlphaGo, OpenAI Five).

While AI's development is the result of many contributions, artificial neural networks, particularly deep learning, are the main invention that has made modern AI possible. These models, when combined with large datasets and powerful computational resources, have enabled AI systems to achieve remarkable feats across diverse fields, from natural language processing to autonomous driving.

Explainability of the decision-making process in AI

Being able to explain how an AI system reaches its decisions is important for many critical applications, particularly those that involve human safety.

AI, especially modern machine learning (ML) models, is often not readily explainable due to several factors related to the complexity of the models, the nature of the data, and the methods used to train and deploy these systems. Here are some key reasons why AI can be difficult to explain:

Complexity of Models (Black-box Nature)

- Deep Learning: Many AI systems, particularly those based on deep learning, consist of numerous layers of interconnected nodes that process data in highly complex ways. Each layer in a deep neural network transforms the data non-linearly, making it difficult to trace exactly how the input data is turned into the output. The result is what is known as a "black-box" model, whose internal workings are not easily interpretable by humans.

- Large Models: AI systems, especially in fields like natural language processing or image recognition, are often based on very large models with millions or even billions of parameters. The sheer number of parameters and interactions between them makes it hard to explain why a model made a particular decision.
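Parameter counts grow quickly because a fully connected layer mapping n_in inputs to n_out outputs has n_in × n_out weights plus n_out biases. The sketch below counts them for a hypothetical network that feeds raw 224×224 RGB image pixels into two dense hidden layers and a 1,000-class output; the layer sizes are assumptions for illustration only.

```python
def count_dense_params(layer_sizes):
    """Count weights and biases in a stack of fully connected layers.

    A layer mapping n_in inputs to n_out outputs has n_in * n_out weights
    plus n_out biases.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical image classifier: flattened pixels -> 4096 -> 4096 -> 1000 classes.
sizes = [224 * 224 * 3, 4096, 4096, 1000]
print(f"{count_dense_params(sizes):,}")  # → 637,445,096
```

Even this modest stack of three layers has over 600 million parameters, and no single one of them explains a prediction on its own.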

Non-linearity

- Many machine learning models, especially those used in AI, are built from non-linear functions. Non-linear relationships are much harder to interpret than linear ones. In a linear model, each input's effect on the output is a fixed coefficient; in a non-linear model, that effect depends on the current values of the input and of the other inputs, so small changes in input can lead to disproportionately large or unpredictable changes in the output. This lack of transparency in how input data is transformed into a decision or prediction makes the AI's reasoning difficult to explain.
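The contrast is easy to demonstrate: nudging the input of a linear function changes the output by the same amount wherever you start, while the same nudge to a steep non-linear function does almost nothing in one region and nearly flips the output in another. The two toy functions below (a line and a steep sigmoid) are hypothetical stand-ins for real models.

```python
import math


def linear_model(x):
    """Linear: the output moves by 2.0 per unit of x, everywhere."""
    return 2.0 * x + 1.0


def nonlinear_model(x):
    """Steep sigmoid: almost flat far from x = 0, very sensitive near it."""
    return 1.0 / (1.0 + math.exp(-20.0 * x))


def effect_of_nudge(model, x, delta=0.1):
    """How much the output changes when the input is nudged by delta."""
    return model(x + delta) - model(x)


for x in (-1.0, -0.05, 1.0):
    print(f"x={x:+.2f}  linear: {effect_of_nudge(linear_model, x):.3f}  "
          f"nonlinear: {effect_of_nudge(nonlinear_model, x):.3f}")
```

The linear column is constant, so one number describes the input's influence; the non-linear column is near zero at the extremes and large near the threshold, so no single number does.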

Training on Huge Datasets

- AI models are often trained on large and diverse datasets, sometimes containing millions of examples. These datasets can introduce a level of statistical complexity that makes the decision-making process of the AI hard to follow. The model might learn patterns or correlations that are not immediately apparent or understandable to humans, especially if the dataset is biased or noisy.

Feature Interactions

- In many machine learning models, multiple features (inputs) interact in complex ways, and the contribution of each feature to the final decision may not be clear-cut. For example, a machine learning model used for predicting credit scores might rely on a complex interaction of features like income, credit history, spending behavior, and even indirect factors like social behavior, all of which contribute to the final outcome. Understanding these interactions in a simple, human-readable way can be challenging.
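A toy example shows why a single "importance" number per feature can mislead when features interact. In the hypothetical scoring rule below (the formula and coefficients are invented for illustration and do not come from any real credit model), the effect of extra income depends entirely on the length of the credit history.

```python
def hypothetical_credit_score(income_k, years_history):
    """Toy scoring rule with an interaction term (all coefficients invented).

    Income helps far more when the applicant also has a long credit history.
    """
    return 500 + 1.0 * income_k + 10 * years_history + 0.5 * income_k * years_history


def income_effect(years_history, extra_income_k=10):
    """Score change from +10k income, holding credit history fixed."""
    return (hypothetical_credit_score(50 + extra_income_k, years_history)
            - hypothetical_credit_score(50, years_history))


print(income_effect(years_history=0))   # → 10.0
print(income_effect(years_history=10))  # → 60.0
```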

Lack of Clear Causality

- AI models often identify correlations in the data rather than explicitly capturing causal relationships. For instance, a model may predict that a customer is likely to churn based on certain behavior patterns, but it cannot tell whether those behaviors actually cause the churn or merely accompany it. This lack of causality in explanations makes it difficult to provide intuitive or actionable insights into why a particular decision was made.
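A small simulation, built on invented assumptions, shows how a strong correlation can appear with no causal link behind it. Here a hidden factor (hypothetically, dissatisfaction with a service) drives both a drop in logins and churn risk, so logins predict churn well even though churn is generated without any reference to logins. The variable names, sample size, and noise levels are all hypothetical.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


rng = random.Random(42)
hidden = [rng.gauss(0, 1) for _ in range(1000)]            # unobserved cause
fewer_logins = [h + rng.gauss(0, 0.3) for h in hidden]     # driven by `hidden`
churn_risk = [h + rng.gauss(0, 0.3) for h in hidden]       # also driven only by `hidden`

print(round(pearson(fewer_logins, churn_risk), 2))  # strong positive correlation
```

Because `churn_risk` is computed from `hidden` alone, forcing customers to log in more would leave churn unchanged in this simulation, even though a model trained on the data would lean heavily on logins.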

Uncertainty and Probabilistic Nature

- Many AI systems, particularly those used in machine learning, operate probabilistically. For example, a model might predict that an event has a 70% chance of occurring. However, the probability alone does not provide a clear, understandable explanation for why the model made that prediction. Instead, it reflects the model’s confidence based on patterns it has learned, which might be difficult to interpret directly.
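A minimal logistic model makes the point concrete. With the weights and inputs below (invented for illustration), two cases with completely different feature profiles receive exactly the same 70% probability, so the number alone says nothing about which feature drove it.

```python
import math


def logistic_probability(features, weights, bias):
    """Logistic model: p = sigmoid(w . x + b)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


weights, bias = [1.0, 1.0], 0.0   # hypothetical trained parameters
logit_70 = math.log(0.7 / 0.3)    # the score that maps to p = 0.7

case_a = [logit_70, 0.0]  # driven entirely by feature 1
case_b = [0.0, logit_70]  # driven entirely by feature 2
print(round(logistic_probability(case_a, weights, bias), 3))  # → 0.7
print(round(logistic_probability(case_b, weights, bias), 3))  # → 0.7
```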

Trade-off Between Accuracy and Explainability

- There is often a trade-off between model accuracy and explainability. More complex models like deep neural networks or ensemble methods (e.g., random forests, gradient boosting) can achieve higher accuracy, but they are harder to interpret. Simpler models, like decision trees or linear regression, are more interpretable, but they may not perform as well in terms of predictive accuracy. This balance complicates the creation of AI systems that are both high-performing and easily explainable.
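The interpretability of linear models comes from the fact that a prediction splits exactly into one additive contribution per feature (weight × value) plus a bias. The house-price model, feature names, and coefficients below are hypothetical.

```python
def explain_linear(weights, bias, features):
    """Return the prediction and each feature's additive contribution w_i * x_i."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    prediction = bias + sum(contributions.values())
    return prediction, contributions


# Hypothetical house-price model (prices in thousands).
weights = {"area_m2": 2.0, "bedrooms": 15.0, "age_years": -1.5}
bias = 50.0
house = {"area_m2": 120, "bedrooms": 3, "age_years": 20}

prediction, contributions = explain_linear(weights, bias, house)
for name, value in contributions.items():
    print(f"{name:>10}: {value:+.1f}")
print(f"prediction: {prediction:.1f}")  # → prediction: 305.0
```

No comparable exact, per-feature breakdown falls out of a deep network's weights, which is the interpretability cost that comes with its extra flexibility.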

AI is Context-dependent

- The explanations of AI models often depend on the context in which they are applied. For example, a model that works well for predicting whether a customer will buy a product based on past behavior may behave differently when applied to another domain, like diagnosing medical conditions. Understanding why a model works in one domain doesn't necessarily transfer easily to another, and thus the explanations need to be adapted to each specific application.

Lack of Standardization

- There is no universal method for interpreting or explaining AI decisions. Different types of models, data, and applications call for different explainability techniques. Methods like LIME (Local Interpretable Model-Agnostic Explanations), SHAP (Shapley Additive Explanations), and counterfactual reasoning are all attempts to provide explanations, but each rests on its own assumptions, and they can produce different explanations for the same decision. The lack of standardized approaches means explanations can vary widely, further complicating the understanding of AI behavior.
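To illustrate the idea behind SHAP without relying on any library API, here is a brute-force computation of exact Shapley values for a tiny hypothetical model. Each feature's value is its weighted average marginal contribution over all coalitions of the other features, with "absent" features set to an assumed baseline; the values add up to the difference between the prediction and the baseline prediction. The SHAP library uses faster, often approximate, algorithms and its own background data, so this is only a sketch of the underlying concept.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values by enumerating every coalition of the other features.

    A feature that is absent from a coalition is set to its baseline value,
    which is one common (and assumed) way to remove it. Cost grows as 2**n.
    """
    n = len(x)

    def value(coalition):
        # Model output when only the features in `coalition` take their real values.
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))  # marginal contribution of i
        phi.append(total)
    return phi


def toy_model(v):
    """Hypothetical model: feature 0 acts alone, features 1 and 2 interact."""
    return 2.0 * v[0] + v[1] * v[2]


x_point, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x_point, baseline)
print([round(p, 6) for p in phi])  # → [2.0, 3.0, 3.0]
print(sum(phi), toy_model(x_point) - toy_model(baseline))  # these should agree
```

Note how the interaction term's contribution of 6 is split evenly between features 1 and 2: a reasonable convention, but one of the assumptions that makes different explanation methods disagree.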
